How does Information move through Space and Time?

In the prior article the Author examined how the Living Algorithm’s Algebra leads to the notions of Data Stream Velocity & Acceleration. But for something to have velocity or acceleration, it must first move through space and time. It’s easy to imagine an object moving from one location to another over time. In fact, this describes the world we live in, or at least the world we conceive. As we amble around our environment at irregular speeds, we move through space and time. Information, however, is not an object. The Author showed us how to calculate the velocity and acceleration of a data stream, but how are we to conceive of this motion? In what medium does a flow of information move? What does it mean for the information of a data stream to move through space and time?

To understand this counterintuitive notion, it is necessary to highlight the similarities and differences between Space and Time in Physics and in the Living Algorithm. Read on to see how we ended up in this intriguing location: between the space-time matrices of external matter and internal information processing.

Information Processing Bridges Internal & External Worlds

The foundations are in place. Let the investigation commence.

External World constitutes Objective Reality

We hold the notion that the external world, where matter moves through space over time, constitutes objective reality. However, this theory is derived from sensory data that we have digested. We have confirmed it so many times, experientially and experimentally, that we take it to be truth, just as we take gravity to be real. (To see how we determine reality, see the three affirmations.)

Sensory Mechanisms evolve to support theory of Objective Material Reality

In service of this theory (that there is an objective material reality), our senses immediately translate environmental input in a way that further supports it. Our two ears process auditory information to create a sense of space. Our two eyes process visual information to emphasize and extend this notion of space. Together with the senses of smell, touch and acceleration, these two senses form an informational redundancy loop that creates a sensitive, sophisticated and complete sensory picture of the external world.

Internal Information Digestion creates notion of External World

The external world of matter does not need the internal world of data processing to exist, but it needs the internal world of data processing to be perceived. As the ultimate information processors, humans perceive and create the ‘objective’ external world of matter via a 'practical' infinity of data streams that are digested by our senses. Because this theory is so practically effective, our senses have been programmed to digest data in support of it. As such, our perception of a common sense world, where matter exists in a specific location in time and space, depends upon our talents as data processors. Accordingly, the theory of an objective external reality is a special case, just one of a multitude of ways in which we organize information. It is evident that internal information processing is the bridge that joins the internal and external worlds.

External vs. Internal regarding Time & Space

Although the brain (our central processor) digests the same sensory data streams to create the two worlds, there are many differences between the external world of matter and the internal world of information processing. Space, matter and time are viewed as continuous and permanent in our external ‘objective’ world. Our senses confirm this notion of reality so regularly that it is no longer in doubt. Conversely, space, matter and time are incremental, flexible and changing in the internal world of information processing – as we shall see.

Information Time vs. Physical Time

External Time is Non-elastic & Objective; Internal Time is Elastic & Subjective.

Let us begin our discussion with the concept of time.

The physical universe is entirely external, while the digestion of our information universe is entirely internal. Matter moves through external space over external time, while data moves through internal space over internal time, as we shall discuss. External time is non-elastic according to the laws of the Newtonian Universe. We have clocks and other measuring devices to remind us of this absolute objective time.

Objective External Time created by crosschecking Data Streams

Physical time, although objective, is a construct that we assign to our world. This notion allows us to organize and respond to data input from our environment more effectively, and this organization is crucial for survival. We merge and crosscheck the information from multiple environmental data streams to validate the objectivity of time. Yet none of these information flows has any concrete reference point with regard to time. Each data stream is just a string of numbers, quantities, or information, which the organism processes sequentially. The information from multiple data streams is merged into composite data streams. Time is read into this process.

The Necessity of Segmented Data Streams

Internal Time elastic and subjective

In contrast to the relative objectivity of external time, internal time is elastic and subjective. This is due to the way in which our information matrix operates. The information matrix is meant to glean meaning from sensory input derived from our environment. This task is accomplished by organizing or crunching the information into a useable form.

Continuous Info Flow broken into Discrete Chunks for 2 Reasons.

The data stream itself is the first step in the organization of the continuous flow of environmental input. This continuous flow must be broken into discrete chunks (the data stream increments). This segmentation is required for two primary reasons. 1) Organizing the information flow as a string of data gives the organism an opportunity to respond to environmental stimuli. 2) The information matrix requires data in a segmented form to better digest and analyze the sensory input. Both of these features are crucial for survival.
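The segmentation step can be pictured as sampling: a continuous input is read only at discrete moments spaced an interval T apart. The sketch below is illustrative only. It assumes uniform sampling and a made-up input function `light_level`; neither comes from the article, and living systems need not sample at perfectly regular intervals.

```python
import math
from typing import Callable, List, Tuple


def segment(signal: Callable[[float], float], n_increments: int, T: float) -> List[Tuple[float, float]]:
    """Read a continuous signal only at t = 0, T, 2T, ..., producing discrete increments."""
    return [(k * T, signal(k * T)) for k in range(n_increments)]


def light_level(t: float) -> float:
    """Hypothetical continuous environmental input: a slow oscillation between 0 and 1."""
    return 0.5 + 0.5 * math.sin(2 * math.pi * t / 8.0)


# Eight increments, one unit of time apart.
stream = segment(light_level, n_increments=8, T=1.0)
for t, value in stream:
    print(f"t={t:.0f}  value={value:.3f}")
```

Everything between two readings is simply not part of the data stream; shrinking `T` over the same stretch of time yields more, finer-grained increments.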

Data Stream's Discrete Chunks called Increments.

The data stream chunks are not exclusively digital, as they cannot necessarily be broken down into electronic ones and zeros. These segments are not exclusively quanta, as they are not necessarily composed of elementary particles such as photons, although they can be. Increment is a more general term that describes information that can be digital, quantized, or of a more general bunchiness. Accordingly, 'increments' is the name we give to these discrete chunks.

The Feedback Loop

Data stream time subjective because there is no objective way of measuring increments.

Breaking the continuous flow of environmental information into increments (discrete data points) produces a data stream time that is both elastic and subjective. There are inherent reasons for this. On the most primary level, no pulse of information can be measured in any objective way, because there is no absolute reference point to measure the increments against. Without an absolute reference point, data stream time is subjective.

Interactive Feedback loop between two data streams crucial for survival

The reason data stream time is elastic has to do with the feedback loop that is inherent to living systems and their information matrix. A noteworthy aspect of Life's monitor/adjust feedback process is that there are always two interactive data streams: not just input, but output as well. The external stimulus enters the organism’s processing system. The organism digests this info and then responds accordingly. The organism monitors the next piece of info and then makes an appropriate adjustment. Breaking the continuous flow into discrete chunks is an essential feature of every living system. Essentially, this process incrementalizes analog information, much as a live analog performance is converted into digital samples on a CD. This requirement provides the organism, whether single cell or complex human, with an opportunity to respond appropriately to environmental stimuli. This feature of information processing is the feedback loop.
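The monitor/adjust cycle, with its two interacting streams (input and output), can be sketched as a simple loop. This is a generic control-loop illustration, not a model the article specifies: the proportional correction and the `gain` and `target` parameters are assumptions chosen only to make the two streams visible.

```python
from typing import List, Tuple


def feedback_loop(inputs: List[float], target: float, gain: float = 0.5) -> Tuple[List[float], List[float]]:
    """Hypothetical monitor/adjust loop: read one increment, respond, then read the next.

    Returns the perceived input stream (environment plus our own last response)
    and the output stream of responses.
    """
    adjustment = 0.0
    perceived: List[float] = []
    outputs: List[float] = []
    for x in inputs:
        sensed = x + adjustment      # monitor: input as altered by the previous response
        perceived.append(sensed)
        error = target - sensed      # compare with the desired state
        adjustment += gain * error   # adjust: this response shapes the next input
        outputs.append(adjustment)
    return perceived, outputs


# Hypothetical: the environment holds steady at 10 while the organism "prefers" 20.
perceived, outputs = feedback_loop([10.0] * 6, target=20.0)
print(perceived)
print(outputs)
```

Each response can only happen between increments, which is why the segmentation of the input stream is what makes the give-and-take possible in this sketch.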

Speed of Feedback Loop is Variable

To enable the stimulus/response mechanism that is essential to the survival of living systems, the continuous flow of information is broken into increments: manageable parts. The number of increments per unit of time determines the speed of the feedback loop, and the speed of the feedback loop determines crucial response time. The greater the speed, the quicker the response time. Similarly, the more dots per inch (DPI), the higher the resolution of an image. When we are alert, the speed of the feedback loop is faster. When we are groggy or under the influence of alcohol, the speed is slower. This slowdown of the feedback loop impairs our ability to drive safely. Perhaps when we are under the influence of psychedelics the feedback loop is so fast that it distorts our perception of ‘normal’ reality. It is evident that the speed of the feedback loop is variable, depending upon many different conditions, both internal and external.

Speed of the feedback loop an evolutionary factor

As an aside, some scientists maintain that the relative speed of Life's feedback loop is a factor behind evolution and intelligence. The quicker the environmental information is digested and responded to, the more likely the organism is to survive. Whether for a basketball player, a debater, or a microscopic organism, the speed of the feedback loop is a factor that determines crucial response time. As such, a quicker feedback loop provides an evolutionary advantage to the organism. The speed of this loop is partially a function of how rapidly the information is processed.

Speed of feedback loop determines Data Stream Time

As mentioned earlier, data moves through internal space over internal time. The duration between successive data stream increments (data points) determines the speed of the feedback loop. The shorter the interval between data points, the shorter the feedback loop and the quicker the response time. Conversely, the longer the feedback loop, the slower the response time.
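The link between the interval and response time can be made concrete with a little arithmetic. Assuming readings at t = 0, T, 2T, ... and that a stimulus is noticed only at the first reading at or after it occurs, the wait before it registers is always less than one interval T. The "alert" and "groggy" interval values below are hypothetical.

```python
import math


def registration_delay(stimulus_time: float, T: float) -> float:
    """Time from a stimulus until the first increment that can register it.

    Assumes readings at t = 0, T, 2T, ... and that a stimulus is noticed
    only at the first reading taken at or after the moment it occurs.
    """
    next_reading = math.ceil(stimulus_time / T) * T
    return next_reading - stimulus_time


# Hypothetical intervals in seconds: a short loop (alert) and a long loop (groggy).
for label, T in [("alert", 0.1), ("groggy", 0.5)]:
    delay = registration_delay(1.23, T)
    print(f"{label}: T = {T} s, delay = {delay:.2f} s (at most about T)")
```

The same stimulus is registered sooner by the shorter loop, and the worst-case delay scales directly with T.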

Conscious Control over Feedback Loop imparts Elasticity, Subjectivity to Data Stream Time

It is entirely plausible that the organism has some control over the feedback process, albeit subconscious. In other words, we may speed up and slow down our feedback loop according to circumstances. In fact, the effort of concentrating could, in part, be a conscious effort to speed up our feedback loop, because if the feedback loop is too slow, material will not be understood. Humans, at least, appear able to stretch and shrink the feedback loop to respond more flexibly to environmental circumstances. The flexible nature of the feedback loop imparts additional elasticity to data stream time. This elasticity enables a flexible response. It also adds another layer of subjectivity to a data stream’s internal time.

Time is the same; Interaction determines difference

The foregoing discussion might seem to imply that we are speaking about two types of time. We are not. Instead, the type of interaction determines the way time is perceived. Quoting the prior article:

"The physical and information matrices interact with time in different ways. Interaction between the physical world and time is analog (continuous). Because a stream of information is necessarily comprised of distinct data points for digestion purposes, the interaction between an information digesting system and ‘time’ is segmented in nature. Time is the same in both systems. It is the way that the particular system interacts with time that creates the difference. Because living systems must digest data streams to survive, their information processing system also requires a segmented interaction with time."

Subjective Data Streams merged to create Objective Physical Time

Reiterating for memory: the continuous flow of environmental information must be transformed into segmented data streams. This segmentation facilitates the digestion of information and enables a give-and-take relationship with our surroundings. It also creates an internal information time that is both subjective and elastic. However, the merger of these subjective data streams creates the notion of objective external time through the redundancy of crosschecking.

The Explosion of Time to include Objective Physical Time and Subjective Info Time

Now that we have exploded the notion of time to include both the internal time of information flow and the external time of our physical universe, we are going to explode the notion of space to include both internal space and external space. Space is another of the foundational Newtonian constructs that must be ‘exploded’ to bridge Matter and Life. (See Newtonian Constructs Exploded.)

Information Space vs. Physical Space

Matter Changes External Location – Information Digester perceives from Fixed Internal Location

Newton’s Universe of Matter is entirely external. In this context, the movement of matter through space is measured as the distance between external locations. Conversely, the Universe of Information Processing is completely internal. No matter how far or fast we move externally, the organism digests information internally from the fixed location of the brain.

External world is just a theory developed by our Information Digester

Because our position as a data processor is fixed, external space exists only as a theory. The notion of space, like time, is an effective way of organizing and responding to the ongoing flow of data. However, while matter moves through external space over time, data moves through internal space over internal time. Although the information comes from dynamic external sources that exist in the space-time continuum, the data is processed from within our own Body/Mind.

Biological Information Processing determines our perceptions

This essential separation from external material reality is the root of the Buddhist/Hindu notion that Reality is an illusion created by Consciousness. This analysis is not meant to cast doubt on the notion of an objective external reality. It is merely asserting the obvious: how we process information determines our perception of the external world, including the notions of space and time.

Our Brains process a dynamic flow of Information.

The sole location of information processing is in the brain. The data that we process is inherently dynamic. We translate dynamic sensory data streams into the notion of a dynamic external world. This theory is constantly affirmed by our give and take relationship with our surroundings. Because of this continuous affirmation, we presume the world to be real. In this fashion we are all scientists, collecting sensory data, digesting it, forming theories, and then testing them. This process begins with birth – when the flood of information begins bombarding our senses. This analysis could be called a truism – indisputable, as it were.

Data Stream Parameters determine Dimensions of Info Space

Each of our senses can only perceive a particular type of data stream. For instance, eyes can see and ears can hear, but ears can’t see. Further, our perception of the incoming data stream has certain limits due to the sensitivity range of our senses. As an example, the eyes only process a limited range of the full electromagnetic spectrum. The ears can only perceive a limited range of frequencies; dogs and bats can hear certain frequencies that are inaccessible to the human ear. Accordingly, each sensory data stream can only have a certain range of values. The perceptive limits of an organism determine this range. This range of values is what we will call information space.
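A perceptive range acts like a filter on what can enter a sensory data stream at all. The sketch below uses the commonly cited approximate limits of human hearing, about 20 Hz to 20 kHz (the real range varies between individuals and narrows with age); the `perceive` function and the sample frequencies are illustrative assumptions.

```python
from typing import Iterable, List, Tuple

# Commonly cited approximate limits of human hearing, in hertz.
HUMAN_HEARING_HZ: Tuple[float, float] = (20.0, 20_000.0)


def perceive(frequencies: Iterable[float], limits: Tuple[float, float] = HUMAN_HEARING_HZ) -> List[float]:
    """Keep only the values that fall inside an organism's perceptive range."""
    low, high = limits
    return [f for f in frequencies if low <= f <= high]


# 10 Hz is below the human range; 30 kHz is ultrasonic, above it.
sounds = [10.0, 440.0, 15_000.0, 30_000.0]
print(perceive(sounds))
```

Passing different `limits` models a different organism: the same environment produces a different data stream, with a different information space.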

Composite Data Streams Predominate

Composite Data Streams create Notion of external, objective Reality

Composite data streams create the notion of an ‘objective’ reality. Each of these composite data streams is comprised of a confluence of numerous raw data streams. These streams lose their identity when they are merged. The end result of the merger is the integration of the individual parts into a composite whole. This integrated information is the basis of what we perceive as objective reality.

Example: Notion of Space requires Info from both Eyes & Ears.

For instance, the digested sensory information from one ear or one eye, alone, is not enough to create the notion of depth. To experience reality in 3 dimensions plus time, it is necessary to merge the sensory information from both eyes and both ears. The raw data streams that are merged to create the notion of an ‘objective’ reality are independent of the constructs of space, time, and matter. The ability to perceive space, time and matter is only possible by merging data streams.

Redundancy for Accuracy

The question arises: How is it possible to generate an objective space and time from data streams that have subjective parameters?

Our Brain employs the digested information from the five senses as a form of crosschecking to further solidify and stabilize our notion of reality. This technique could be called 'multiple forms of redundancy to establish accuracy'. For instance, the digested data streams from our eyes, ears, skin, and nose are compared against each other to create a consistent perception of our world. To see how essential this crosschecking is, think how disorienting it is when just one ear is clogged up. One redundancy channel is blocked and our space-time perspective is unbalanced.
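The idea of "multiple forms of redundancy to establish accuracy" can be illustrated with a consensus check across channels. This is a sketch under stated assumptions, not a claim about how the brain merges senses: it swaps in a standard robust-statistics technique (comparing each channel against the median of all channels), and the channel names, distances, and `tolerance` are hypothetical.

```python
from statistics import median
from typing import Dict, List


def crosscheck(estimates: Dict[str, float], tolerance: float) -> List[str]:
    """Return the channels whose estimate disagrees with the consensus.

    The consensus is the median of all channels, so a single faulty
    channel cannot drag it far.
    """
    consensus = median(estimates.values())
    return [name for name, value in estimates.items() if abs(value - consensus) > tolerance]


# Hypothetical distance estimates (meters) to one object from several senses.
normal = {"eyes": 4.9, "left_ear": 5.1, "right_ear": 5.0, "touch": 5.2}
clogged = {"eyes": 4.9, "left_ear": 5.1, "right_ear": 8.0, "touch": 5.2}

print(crosscheck(normal, tolerance=0.5))
print(crosscheck(clogged, tolerance=0.5))
```

When every channel agrees, nothing is flagged; when one channel (here a "clogged" ear) drifts, the disagreement shows up immediately against the others.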

Raw Data Streams create internal, subjective reality

Merging raw data streams into composite data streams is an essential method by which living systems organize environmental information. Besides creating the notion of an objective external reality, composite data streams are also employed to organize subjective internal reality.

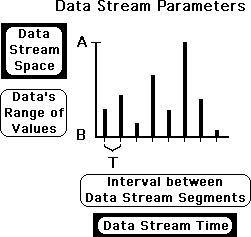

Chart: Data Stream Space & Time – Parameters

Thus far we have only tossed words at the unusual notions of data stream space and time. Let us reinforce this verbal understanding with a chart.

X-axis = Time, Y-axis = Space

The parameters of the data stream determine the limits of data stream space and time. In the graph at the right, we picture a raw data stream. The vertical y-axis determines the location of the data in data stream space, and the horizontal x-axis determines data stream time. Let's see how.

Data Stream Time intervals come in Increments

Notice how the data stream comes in chunks (increments). T, the time interval between each increment, determines data stream time. Let us suppose that we take a reading every minute (T = 1 minute). If there are 8 increments, the data stream exists over 8 minutes of time. Yet it doesn't exist in time in a continuous fashion as does matter, but in distinct chunks instead.

Range of Data Stream determines Limits of Data Stream Space

The range of a particular data stream determines the limits of that data stream space. In this situation the data stream can only assume values between A and B (B ≤ Data ≤ A). Our data stream exists in this space. In the case of the binary data streams that we have been studying, the limits are 1 and 0.
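The two parameters in the chart, the interval T along the x-axis and the range B ≤ Data ≤ A along the y-axis, can be bundled into one small structure. The class and method names below are hypothetical; the numbers follow the text (a reading every minute, 8 increments, binary limits 0 and 1), and duration is counted as the number of increments times T, as the text does.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataStreamSpace:
    """Hypothetical container for a data stream's parameters."""
    T: float  # time between increments (data stream time)
    B: float  # lower limit of the data stream space
    A: float  # upper limit of the data stream space

    def contains(self, value: float) -> bool:
        """True if the value lies inside this data stream space (B <= value <= A)."""
        return self.B <= value <= self.A

    def duration(self, n_increments: int) -> float:
        """Data stream time covered by n increments."""
        return n_increments * self.T


binary = DataStreamSpace(T=1.0, B=0.0, A=1.0)  # T = 1 minute; binary limits 0 and 1
stream: List[float] = [1.0, 0.0, 1.0, 1.0, 0.0, 0.0, 1.0, 0.0]
print(binary.duration(len(stream)), "minutes")
print(all(binary.contains(x) for x in stream))
```

Another data source would get its own `DataStreamSpace` with its own T, B, and A, which is the sense in which data stream spaces come in endless variety.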

Data Stream Space has endless variety; only one infinite Physical Space

Each data stream has its own unique limits, determined individually by each data source. As a result, data stream space can come in an almost unlimited variety of forms, each with its own unique range. This includes both sensory and composite data streams. In contrast, space in our atomic physical universe is unitary. This unitary space is infinite, in the sense that it has no boundaries.

Movement through Space & Time requires Sense of Identity

Because Raw Data has no connectivity, it does not move through Time.

The reason we can talk about an object moving through space and time is because the object retains a sense of permanent identity. For instance, we are the same person at the beginning and end of a journey. This analysis doesn't apply to an undigested data stream. Nothing links the individual data points except perhaps the idea that each point belongs to the same stream. The first and last data points in the data stream are unrelated. Accordingly it makes no sense to say that the data points in an undigested stream move over space and time. If no mechanism connects the first and last data points, there is no movement.

Living Algorithm Digestive System provides connectivity to a Data Stream.

As we've seen in prior articles, one of the primary functions of the Living Algorithm's digestive system is relating data points to each other. Once data enters the system, its impact erodes, but never disappears. This connectivity creates a sense of permanent identity in the data stream. To make sense of this abstract gibberish, let's examine a concrete example.
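This section does not restate the Living Algorithm's formula, so the sketch below assumes the exponential-smoothing form L_n = L_{n-1} + (x_n − L_{n-1}) / D, with a decay factor D = 10 chosen for illustration; substitute the prior articles' exact formula if it differs. Feeding in a single 1 followed by 0s shows the claim about connectivity: the first point's influence shrinks geometrically but, mathematically, never reaches zero.

```python
from typing import List, Sequence


def living_average(data: Sequence[float], D: float = 10.0, start: float = 0.0) -> List[float]:
    """Assumed Living Algorithm update: L_n = L_{n-1} + (x_n - L_{n-1}) / D."""
    averages: List[float] = []
    current = start
    for x in data:
        current += (x - current) / D  # each new point pulls the average 1/D of the way
        averages.append(current)
    return averages


# One pulse followed by silence: how long does the first point keep mattering?
trace = living_average([1.0] + [0.0] * 49)
print(trace[0], trace[9], trace[49])
```

After n further zeros the pulse's residue is (1/D)(1 − 1/D)^n: smaller at every step, yet still linking the first data point to the latest one.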

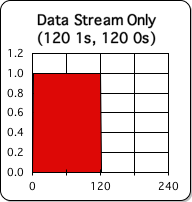

Binary Data Stream does not move through Time

The data stream that generates the graph at the right consists of 120 1s followed by 120 0s. Accordingly, the limits of the data stream space are 1 and 0. If the duration between increments is one minute, it would take 240 minutes (4 hours) to complete the graph. However, neither the red block of 1s nor the invisible block of 0s moves through time; they just are. It makes no sense to talk about motion.
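The parameters of this raw stream can be checked directly: 240 increments at one minute each. Only the counts and the one-minute interval come from the text; the variable names are illustrative.

```python
T_MINUTES = 1  # duration between increments
raw = [1] * 120 + [0] * 120  # 120 ones followed by 120 zeros

# Each raw value is read on its own; nothing here links the first 1 to the last 0.
limits = (min(raw), max(raw))  # limits of the data stream space
duration_minutes = len(raw) * T_MINUTES
duration_hours = duration_minutes / 60

print(limits, duration_minutes, duration_hours)
```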

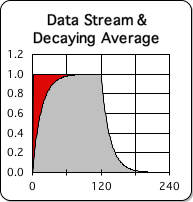

Living Algorithm's Living Average moves through Time

The second graph at the right illustrates what happens when the Living Algorithm digests this data stream. The grey shape in the foreground is a visualization of the digested data stream (the Living Average), while the red block of 1s (the Raw Data) is relegated to the background. Because the beginning and end of the data stream are connected via the digestion process, it makes sense to say that the digested data stream moves through time.

Living Average takes a round trip – from 0 to 1 to 0

Specifically, the Living Average moves from 0 to 1 (one unit) in 120 time intervals (2 hours). Because the distance traveled is one unit and the elapsed time is 120 minutes, one could say that the average velocity for the first two hours of the graph is 1 unit per 2 hours, or 1/2 unit per hour. Conversely, during the second 120 minutes, the data stream travels from 1 to 0. Employing the same reasoning, the average velocity of the data stream in the second half of the graph is also 1/2 unit per hour, but in the opposite direction. In other words, this is a round trip: the data stream begins and ends at zero, but traverses 2 units to get there.
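The round-trip arithmetic can be reproduced by digesting the binary stream. As before, this assumes the update L_n = L_{n-1} + (x_n − L_{n-1}) / D with D = 10, a value picked for illustration; the article's graph may use a different D. With D = 10 the Living Average gets within a few millionths of 1 after 120 ones, so "0 to 1" holds to display precision.

```python
from typing import List, Sequence


def living_average(data: Sequence[float], D: float = 10.0, start: float = 0.0) -> List[float]:
    """Assumed Living Algorithm update: L_n = L_{n-1} + (x_n - L_{n-1}) / D."""
    averages: List[float] = []
    current = start
    for x in data:
        current += (x - current) / D
        averages.append(current)
    return averages


raw = [1.0] * 120 + [0.0] * 120
# Location before any data arrives, then after each one-minute increment.
location = [0.0] + living_average(raw)

half_hours = 120 / 60  # 120 one-minute increments = 2 hours
velocity_first = (location[120] - location[0]) / half_hours
velocity_second = (location[240] - location[120]) / half_hours
distance = abs(location[120] - location[0]) + abs(location[240] - location[120])

print(f"first half:  {velocity_first:+.3f} units/hour")
print(f"second half: {velocity_second:+.3f} units/hour")
print(f"round trip distance: {distance:.3f} units")
```

The sign of each velocity records the direction of travel, while the total distance counts both legs of the trip.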

Living Average indicates location in Space and Time. Comparing Locations yields rates of change.

Accordingly, the Living Average indicates the data stream's location in space at any point in time. For instance, when the time measurement is 30 (the beginning), the Living Average is about 0.6. We also call the location the ‘State of the System’ – where the system is located. Because the Living Average indicates location at a specific moment in time, we can compare locations at different points in time to determine rates of change, like velocity and acceleration. Determining rates of change is not possible with a raw data stream.
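Comparing locations at successive moments gives rates of change. The sketch below uses a plain finite difference as a stand-in; the prior article's Data Stream Velocity and Acceleration may be defined differently. It again assumes the Living Average update with D = 10, and the helper name `rate_of_change` is hypothetical.

```python
from typing import List, Sequence


def living_average(data: Sequence[float], D: float = 10.0, start: float = 0.0) -> List[float]:
    """Assumed Living Algorithm update: L_n = L_{n-1} + (x_n - L_{n-1}) / D."""
    averages: List[float] = []
    current = start
    for x in data:
        current += (x - current) / D
        averages.append(current)
    return averages


def rate_of_change(values: Sequence[float], T: float = 1.0) -> List[float]:
    """Change between successive values, divided by the time between them."""
    return [(b - a) / T for a, b in zip(values, values[1:])]


location = living_average([1.0] * 5)      # State of the System after each increment
velocity = rate_of_change(location)       # change in location per increment
acceleration = rate_of_change(velocity)   # change in velocity per increment

print([round(v, 4) for v in location])
print([round(v, 4) for v in velocity])
print([round(v, 5) for v in acceleration])
```

Each derived series is one element shorter than the one it comes from, since a rate needs two locations to compare.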

Data Stream Space & Time = Discontinuous; Atomic Space & Time = Continuous

There is another point worth noting. Although the graph looks continuous, it is broken into discrete increments, as in the Data Stream Parameter graph. All data streams consist of discrete chunks. As mentioned, an organism must break the continuous flow of environmental information into data streams for the purposes of digestion and response. Therefore, the data stream's Living Average moves through space and time in a discontinuous fashion. In contrast, physical space and time in our everyday atomic world are continuous, and the continuous equations of traditional Physics (Mechanics) define this space and time almost perfectly.

Opening & Closing Formal Systems: a standard process in the Scientific Enterprise

In this article, we have noted that space and time must be 'exploded' to bridge Matter and Life. Why? The traditional notions of these fundamental constructs create a closed system of dynamics that describes the behavior of the material universe almost exactly. However, this closed system has not been able to encompass the behavior of living matter - despite a tremendous effort by many brilliant minds. The Living Algorithm System opens up this closed system of dynamics by 'exploding' traditional concepts. The traditional symbolic metaphor must be expanded to encompass a different slice of reality. This opening up of a closed system to embrace a different slice of reality is nothing new. In fact, this opening and closing process characterizes the scientific enterprise. Let's see what the esteemed Jacob Bronowski has to say about this issue.

"Science is an attempt to represent the known world as a closed system with a perfect formalism. Scientific discovery is a constant maverick process of breaking out at the ends of the system and opening it up again and then hastily closing it after you have done your particular piece of work. … It is in the nature of all symbolic systems that they can only remain closed as long as you attempt to say nothing with them which was not already contained in all the experimental work that you had done. If you want a closed system (which is what Newton's contemporaries hoped that he had achieved), then you must believe that the whole world has now been described and that everything else is just a kind of trivial embroidery. … What distinguishes science is that it is a systematic attempt to establish closed systems one after another. But all fundamental scientific discovery opens the system again. The symbolism of the language is found to be richer than had been supposed. New connections are discovered. The symbolism has to be broadened." (Bronowski, The Origins of Knowledge and Imagination, p. 108)

Summary

Despite fundamental differences, Matter & Info Digestion have a common architecture of dynamics.

As seen, a digested data stream moves through space over time. The Living Average indicates the location of the data stream at a specific point in time. By comparing locations we can determine rates of change. Similarly, we can compare the locations of matter as it moves through space over time to determine its rate of change. Determining rates of change is the foundation of any system of dynamics. As we shall see, both systems, material and informational, have a common underlying structure: the architecture of dynamics. Space and time, as they pertain to data streams and matter, have certain commonalities, but also have significant differences.

Informational measures variable & subjective; Material measures fixed & objective.

Let us summarize the differences. Each data stream inhabits a unique informational space. The data stream parameters determine the dimensions of this info space. It is neither objective, nor fixed, nor continuous. Similarly, info time is elastic, subjective, and discontinuous, not objective in any way. The elasticity of info time stems from the variable lengths of the stimulus/response feedback loops essential to an organism’s survival. In contrast, both space and time are objective and continuous in our atomic Universe.

Information from Subjective Internal World basis of our Notion of Objective External World.

As we have discussed, each data stream determines a variable and subjective space and time. The parameters of info space and time are based upon the parameters of the data stream they belong to. For instance, the variable feedback loop can be a factor that determines the length of the time increments, and the data stream’s range can be based upon sensory limits. As is evident, the concepts of space and time are far more flexible and subjective in the internal world of information processing than they are in the external world of matter. This is true although the internal info world creates the very notion of an external material world. Despite the subjective root of objectivity, we can be confident in its accuracy due to the redundancy of crosschecking input from multiple sensory sources.

Despite subjectivity, the Living Algorithm's Mathematical Mechanisms inform our world in amazing ways.

With such subjectivity, variability and elasticity in these crucial measures, what utility could they possibly have?

Despite the subjectivity of space and time in the Living Algorithm System, the mathematical mechanisms by which we digest information continually seem to influence our human world. There is an overwhelming amount of external evidence, both biological and cultural, that supports the theoretical claims. The forms of data processing both predict our need to sleep and correspond with our internal biology with regard to sleep, as discussed in Book 1. The same mechanisms also predict the well-documented third drive, internal motivation, whose root is autonomy. Some scientists even claim that humans must fulfill this basic need in order to be happy, productive and motivated. Others claim it is a necessary prerequisite for the optimal experience, the Flow.

What is the Relevance of Info Space & Time?

We’ve established that the notions of space and time must be ‘exploded’ to encompass both the world of matter and the world of info digestion. A nagging question emerges spontaneously: What is the utility in ’exploding’ the notions of space and time to encompass these two entirely different systems? What is the relevance of Info Space and Time?

Common System of Dynamics provides Causal Linkage between Living Algorithm System & Behavioral Reality.

The brilliant insights of Newton et al. into the dynamics of atomic matter in the external world apply equally to the dynamics of data streams in the internal world of biological information digesting. (This concept is developed in the current series of articles.) Accordingly, the constructs of Newton's traditional Physics (momentum, force, work, power, and energy) also apply to internal data processing. The construct of Info Power is one of the most complex constructs, as it is a composite of more fundamental constructs, such as space and time. Applying the notion of Info Power to the Living Algorithm System enables us to provide a plausible causal connection between experimentally verified human behavior and the Living Algorithm System. Without the notion of Info Power, the correlations are only associative. As such, we stand directly and consciously on Newton’s shoulders, employing his powerful constructs to both explain and extend our insights into the behavioral dynamics of information digestion. These insights would not have occurred otherwise. This is why exploded Newtonian constructs bridge the universes of matter and life.

What is Data Stream Mass, the 3rd fundamental construct of dynamics?

The complex notions of Space, Time, and Mass provide the foundations for the architecture of Newtonian dynamics. In the current article we explored the meaning of Data Stream Space & Time in relation to Physical Space and Time. In the next article we will explore the third fundamental construct – Data Stream Mass.

It is easy to understand Mass in the context of the physical world. Mass is the substance of our world. Experientially it is anything that provides some kind of resistance to pressure. But what does it mean for a data stream to have mass? And if a data stream has mass, is it the same as physical mass? If not, what are the parallels and differences between this essential construct in the two systems? For some answers to these questions read the next article in the stream – Data Stream Mass.