- 'Experience' in the context of Information Dynamics

- Neurological Evidence for the Importance of Timed Repetition

- Interruptions block Experience

'Experience' in the context of Information Dynamics

'Experience' in the context of Information Dynamics has a strictly mathematical meaning, as does Attention. What does this consist of?

100% Energy Density required for an Experience

In the prior article, Attention: a Filter of Random Signals, we showed that an uninterrupted data stream of sufficient length has an acceleration that rises and falls naturally. We’ve deemed this the Pulse of Attention. If the string of data lasts long enough for the pulse to complete itself, the energy density of the stream approaches 100%. We theorize that complete density is required to enable living systems to ‘experience’ the data stream’s pulse of information. ‘Experiencing’ a data stream is akin to integrating the information stream into our cognitive network. This process could entail the firing of a neuron.

Life experiences Data Stream when Attention cycle is Complete.

In terms of our example, if Attention transmits the data stream's information for a sufficient duration, the squirrel is able to 'experience' the data. Perhaps a neuron fires simultaneously with other neurons and the squirrel registers a preliminary memory trace of 'dark' from the light flickering through the forest. Once the squirrel has experienced (integrated) the data stream's information, it can make a decision as to how to respond. This could be to gather more information, run, eat, or have sex.

Experience in terms of Instants & Moments

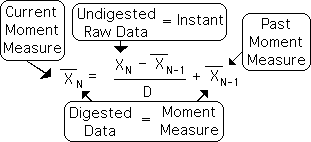

Let's reframe this discussion in terms of some earlier constructs of the Living Algorithm System – instants and moments. Instants consist of raw data. The Living Algorithm digests instants, turning them into ‘moments’. These moments are measures of how the data stream changes over time. They consist of the data stream’s rates of change (the derivatives). This relationship is seen in the algebra of the Living Algorithm.
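To make the digestion of instants into moments concrete, here is a minimal sketch in Python. This article does not spell out the algebra, so the update rule below is an assumption: each running measure moves toward its newest input by a fixed fraction (1/decay) of the gap between them. The names `living_moments`, `decay`, `average` and `change` are hypothetical labels chosen for illustration, not the Author's notation.

```python
def living_moments(instants, decay=16.0):
    """Digest raw instants into a list of (average, change) moments.

    Assumed update rule (not given in this article): each running measure
    moves toward its newest input by 1/decay of the gap between them.
    """
    average = 0.0  # first-level measure: decaying average of the instants
    change = 0.0   # second-level measure: decaying average of the gaps
    moments = []
    for instant in instants:
        gap = instant - average            # distance of the new instant from the running average
        average += gap / decay             # nudge the average toward the instant
        change += (gap - change) / decay   # nudge the change measure toward the gap
        moments.append((average, change))
    return moments


# A sustained stream: the same reading repeated without interruption.
sustained = living_moments([1.0] * 120)
changes = [change for _, change in sustained]
peak_index = changes.index(max(changes))
```

Under these assumptions, the second-level measure of a sustained stream climbs for roughly `decay` steps and then falls back toward zero, which gives one way to picture a pulse that rises and falls.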

An Experience occurs when Data Stream Density ≈ 100%

A sustained data stream generates a pulse of acceleration that rises and falls. Conversely, the acceleration of a random data stream never gets off the ground. A living organism, such as our squirrel, 'experiences' a data stream when a series of uninterrupted 'moments' is sufficient to complete the pulse. The number of moments required to achieve an 'experience' is determined by the data stream density. If the data stream density hovers around 0%, as in a random data stream, there is no 'experience'. An 'experience' occurs when the data stream density reaches 'practical' 100%. However, Life must pay Attention to a consistent data stream for a sufficient duration for the density to reach completion (100%). Instants are digested by the Living Algorithm to create moments. Only if Life focuses the mental energy of Attention upon a sufficient series of moments do they become an experience. These are the necessary conditions for an organism to ‘experience’ the data stream’s information.


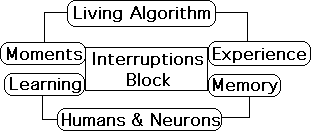

Interruptions can kill an Experience

As the data stream's range of variation (the Deviation) approaches zero, the data stream density simultaneously approaches 100%. Deviation down, Density up, and vice versa. Interruptions in the mental energy channeled through Attention disrupt the momentum of the data stream. These interruptions to the data stream cause the Deviation to rise and the Density to drop. This is why interruptions can eliminate the possibility of a complete 'experience'.
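The effect of interruptions on the Deviation can be sketched numerically. The code below is an illustration under stated assumptions, not the Author's formula: it treats the Deviation as a decaying average of the absolute gap between each instant and the running average, and it models an interruption as a reading of 0 replacing every fifth reading of 1. The names `final_deviation` and `decay` are hypothetical. The sketch does not compute the Density, since this article does not give its formula.

```python
def final_deviation(instants, decay=16.0):
    """Return the decaying deviation after digesting every instant.

    Assumption: the Deviation is tracked as a decaying average of
    |instant - running average|, updated by 1/decay of the gap each step.
    """
    average = 0.0
    deviation = 0.0
    for instant in instants:
        gap = instant - average
        average += gap / decay
        deviation += (abs(gap) - deviation) / decay
    return deviation


# Uninterrupted stream: the same reading 120 times in a row.
steady = [1.0] * 120
# Interrupted stream: every fifth reading is replaced by 0 (a modeled interruption).
interrupted = [0.0 if i % 5 == 4 else 1.0 for i in range(120)]

steady_dev = final_deviation(steady)
interrupted_dev = final_deviation(interrupted)
```

With these inputs the uninterrupted stream ends with a deviation close to zero, while the interrupted stream's deviation stays well above it. This matches the direction of the claim above: interruptions keep the Deviation from falling.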

Neurological Evidence for the Importance of Timed Repetition

The Importance of Repetition in creating Life Experience

As we have discussed, the Living Algorithm digests information to reveal the rates of change (the derivatives) of a data stream. One of these derivatives is the data stream's acceleration. If the information in a data stream is sustained for a sufficient duration, the acceleration rises and then falls. This is the Pulse of Attention. At the end of this pulse, the energy density of the data stream approaches 100%. According to our model, living systems experience (integrate) the information in a data stream as the energy density approaches completion. However, the energy density only approaches 100% if the data in the stream is repeated enough times. Interruptions can diminish the density of a pulse. In other words, repetition is the key to turning moments into a living experience. Further, this repetition must be relatively regular and uninterrupted to achieve the necessary energy density to create an experience. Is there any evidence that supports this interpretation?

Scientific Literature: specifically timed repetitions for permanent learning

Quoting Dr. Medina’s invaluable brain rules,

“Memory may not be fixed at the moment of learning, but repetitions, doled out in specifically timed intervals, is a fixative.” (p. 130)

This is an introductory sentence to a 9-page section in his book on the importance of repetition for learning. Dr. Medina provides an abundance of evidence on both neurological and biological levels to support and refine his claim. Following are just a few of many examples.

“Ebbinghaus showed the power of repetition in exhaustive detail almost 100 years ago. … He demonstrated the [memory] loss could be lessened by deliberate repetitions. This notion of timing in the midst of re-exposure is critical.” (brain rules, p. 131)

Specifically timed repetitions for memory

Medina goes on to explore the importance of timed repetition for both humans and neurons. He first addresses human learning.

“Repeated exposure to information in specifically timed intervals provides the most powerful way to fix memory into the brain.” (brain rules, p. 132)

Specifically timed repetitions for information transfer between Neurons

He then discusses the importance of repetition for memory and learning on the neurological level. In the experiment he describes, one hippocampal neuron attempts to teach another a lesson in a Petri dish. If there is only one lesson, the student neuron resets itself to zero after 90 minutes – as if nothing had occurred.

“If the signal is only given once by the cellular teacher, the excitement will be experienced by the cellular student only transiently. But if the information is repeatedly pulsed in discretely timed intervals (the timing for cells in a dish is about 10 minutes between pulses, done a total of three times) the relationship between the teacher neuron and the student neuron begins to change.” (brain rules, p. 135)

The student neuron shows increasingly greater excitement for increasingly smaller input. Medina concludes the paragraph,

“Even in this tiny, isolated world of two neurons, timed repetition is deeply involved in whether or not learning will occur.” (brain rules, p. 135)

Living Algorithm, Humans & Neurons: Timed Repetition transforms the Transitory into the Permanent.

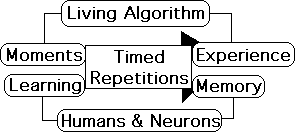

Just as timed repetitions are required to make a permanent change in a neuron, repetitions are also needed to transform a lesson into a permanent memory and for a sequence of moments to become an experience. In other words, the Living Algorithm, humans and neurons require 'timed repetition' to change the transitory into the permanent.

Repetition for a neural firing: Repeated neural firing for a permanent memory

The Author suggests that timed repetitions are also required to fire a single neuron (developed more fully elsewhere). According to the model, the data stream’s energy density must reach 100% before it can spark the gap between mental and physical energy. This spark is required to fire a neuron. The only way the data stream’s density can reach 100% is through a sequence of timed repetitions. Just as multiple repetitions are required to fire a neuron (the neural firing being the experience), a series of these neural firings is required to register a permanent memory. This memory allows the organism to recognize a potentially significant event.

Interruptions block Experience

Neurological Interruptions "block memory formation in total".

Scientists are able to determine when the learning process is complete. Interruptions of any kind abort this process.

“The interval required for synaptic consolidation is measured in minutes and hours … Any manipulation – behavioral, pharmaceutical, or genetic – that interferes with any part of this developing relationship will block memory formation in total.” (brain rules, p. 135)

Our model: Life/Attention ignores random data streams – no repetition

Let’s interpret this process from the perspective of our model. As mentioned in our prior article, Attention filters out random data streams. This prevents information overload for biological systems. Without the necessary repetitions, Attention assumes that the data stream is random and ignores it. Accordingly, Life does not experience a random data stream. Life only experiences organized data streams – ones in which information is repeated. Similarly, the neuron does not register a change unless a signal is repeated regularly. If the signal is not repeated, the organism assumes that the signal is random, hence not worth storing as a memory. It seems that interruptions block the formation of a memory/experience in humans, neurons, and the Living Algorithm's mathematical system of information digestion.

Data Stream Interruptions damp energy density, thereby killing the experience.

Does this analysis concerning session interruptions evoke any subconscious pattern recognition? Let’s attempt to bring it to consciousness. Does the term Interruption Phenomenon ring a bell? Let’s reframe the earlier Creative Pulse study in the current context. In this study we saw that interruptions diminished the quality of a productive session. As mentioned, interruptions to a flow of information also damp the energy density of the data stream. Could it be that a diminished energy density could also have a negative effect upon a productive session? Is this the reason that interruptions have a negative impact upon cognitive performance?

Is the scientific evidence supporting repetition just random clustering?

Is this yet another parallel between biological systems and the Living Algorithm System? Or are we just imagining things? Could all this evidence be circumstantial? Is all this verbiage merely barroom speculation, with no more substance than the morning fog? Are these correspondences perhaps just another in the mounting chain of coincidences, based upon some confounding variable, or more evidence of random clustering? Does this repetition process apply to any other feature of living systems? We certainly don't know.

For some more speculation, check out the next article in the stream – The Biological Hierarchy.