- Probability: from Computational Tool to Theoretical Necessity

- Probability’s Ascendance to Ruler of the Subatomic Realm

- Probability's uncertainty at the heart of Subatomic, hence Physical, Universe

- Embracing inherent Paradox & Ambiguity

- Mechanics & Probability wedded as Modern Physics

- Probability paves the way for the Living Algorithm.

- Living Algorithm Speciality: Dynamic Relationships between Moments

Probability: from Computational Tool to Theoretical Necessity

Mechanics reigns supreme at the end of the 19th century

At the end of the 19th century, the continuous equations of Mechanics (traditional Physics) reigned supreme. Many believed that Mechanics had uncovered all the universal laws of matter. It was recommended that young men pursue a different line of research because this field was exhausted. These continuous equations, which delineated the universal laws of Newton’s mechanics, could accurately account for the behavior of matter down to the atomic level. The subatomic realm, which was to turn everything upside down, had yet to be revealed.

Explanatory Power of Continuous Equations imply that Space and Time, Cause and Effect are unbroken

Due to their explanatory power, it was thought that these continuous equations accurately reflected the nature of Matter, the Universal Substance. Some of the more enthusiastic claimed, and some still claim, that this power also extends to Life, the form of matter that is alive. The implications of these continuous equations are basic. Space and Time are continuous and distinct. Cause and Effect is an unbroken, instantaneous, automatic affair. Once the appropriate starting point (initial conditions) has been established, the appropriate equation, which had already been derived, could accurately determine all future moments with great precision. In other words, the Universal Fabric had no tears or discontinuities. Everything moved as if it were some giant clock. It was a comforting, feel-good perspective on the nature of reality. All behavior conforms to precise laws. No paradox or ambiguity. Nothing unknown. Everything has a scientific explanation.

Probability’s credentials still dubious because of inability to accurately predict the next moment in time

At this point in history (the late 1800s) Probability was just a supporting actor. Just recently admitted to the exclusive Science Circle, his credentials were a bit dubious. Probability was still associated with gambling and its unpredictable laws of chance. Employing Gaussian distributions (the normal curve), Probability could accurately predict long-term patterns. This was his scientific utility. However, he could not determine what would happen in the next throw of the dice. Because of his inability to make firm predictions, Mechanics looked down on Probability as a messy or wishy-washy mathematics.
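The contrast between long-term regularity and single-throw unpredictability can be made concrete with a small simulation. The Python sketch below is illustrative only; the function name `roll_frequencies`, the fixed seed, and the numbers of throws are assumptions chosen for this example.

```python
import random

def roll_frequencies(throws, seed=0):
    """Roll a fair six-sided die `throws` times; return the share of each face."""
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    counts = [0] * 6
    for _ in range(throws):
        counts[rng.randint(1, 6) - 1] += 1
    return [c / throws for c in counts]

# The long-run shares settle near 1/6, yet no run tells us the next face.
for n in (60, 6_000, 60_000):
    freqs = roll_frequencies(n)
    worst = max(abs(f - 1 / 6) for f in freqs)
    print(n, "throws: largest deviation from 1/6 =", round(worst, 4))
```

The deviation from 1/6 tends to shrink as the number of throws grows, while the outcome of any single throw remains as unpredictable as ever.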

Mechanics needs Probability to compute atomic interactions

As long as Mechanics was describing planetary position or the trajectories of cannon balls, he was on solid ground. The situation changed as ordinary sized objects turned into collections of atoms and molecules. The computations were too overwhelming for the simplified version of reality supplied by Mechanics. He had to rely on Probability to perform the computations regarding the atomic universe. This was a natural progression for Probability, whose specialty is characterizing the features of fixed data sets. He had dealt effectively with data sets of identical dice or coins. Probability could apply these same talents to identical atoms and molecules. Thermodynamics was the first to employ Probability’s talents extensively to precisely predict the behavior of hundreds of millions of atoms and molecules. Probability became the computational tool of choice when dealing with material systems containing quadrillions of equally weighted elements. He is able to make exceptionally precise, practically exact, predictions about the behavior of gases. For instance, Probability’s measures enable scientists to make miraculously accurate statements about the behavior of oxygen molecules when they are heated.
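One way to see why predictions about huge collections become "practically exact" is that the relative size of chance fluctuations shrinks as the number of identical elements grows. The sketch below assumes each element behaves like an independent fair coin, which is a simplifying assumption and not a model of a real gas; the function name `relative_fluctuation` is hypothetical.

```python
import math

def relative_fluctuation(n, p=0.5):
    """Standard deviation of the observed fraction of 'heads' among n independent
    trials, divided by the expected fraction p (simple binomial model)."""
    return math.sqrt(p * (1 - p) / n) / p

# From a handful of coins to hundreds of millions and quadrillions of elements
for n in (100, 10**8, 10**15):
    print(f"{n:.0e} elements: relative fluctuation ~ {relative_fluctuation(n):.1e}")
```

Under this assumption the fluctuation falls off as one over the square root of the number of elements, which is why statistical statements about very large collections look nearly deterministic.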

Probability employed as a practical, not theoretical, necessity.

Probabilistic uncertainty, of course, flew in the face of the deterministic worldview of Mechanics where everything could be predicted. Yet the contradiction was easily resolved. If the positions and trajectories (the initial conditions) of all the atoms and molecules could be accurately mapped out, Mechanics could determine the next throw of the atomic dice. Probability’s talents had just been employed as a form of approximation – a computational necessity due to the sheer number of atomic particles in the process. Probability was not yet a philosophical necessity. In theory it was certainly possible to calculate the behavior of the atomic particles with the laws and principles of Newtonian dynamics. Probability was only necessary to make the calculations possible, not for theoretical purposes. He was just a supporting actor – a mere computational tool with no other significance.

Mechanics: “Probability just a computational tool, not a reflection of reality”

“Employing Probability is merely a practical convenience,” Mechanics confidently asserted. “This reliance on Probability in no way impacts our view of an orderly, continuous universe where everything is predetermined. My system describes the natural order perfectly, so my equations still reign supreme. There is no place in my system for Probability’s uncertainty.” At this stage in history scientists could still envision a world that consisted of distinct particles and waves automatically and continuously interacting with each other. Then came the electron.

Probability’s ascendance to Ruler of the Subatomic Realm

Electrons: Atoms of Electricity?

In the late 1800s the scientific community had good reason to believe their quest to uncover the elemental building blocks of the Universe was at an end. Most of the experimental evidence of the time indicated that atoms are the fundamental particles underlying material existence. The prevailing view was that these atoms were indivisible particles - microscopic grains of sand. When J.J. Thomson uncovered evidence for the electron, many considered it to be the 'atom of electricity'.

Electrons: Tiny particles that orbit the nucleus of an atom?

Rutherford, Thomson's student, presented evidence indicating that electrons, instead of being a type of atom, are tiny particles that orbit the nucleus of an atom. Under this view the 'indivisible atom', the fundamental particle, is more space than matter. These findings flew in the face of the current atomic paradigm and catalyzed a break with Thomson. Everyone was looking for a tiny brick with which the universe could be constructed. Instead of a microscopic particle, there was this entity that was filled with emptiness - hardly the candidate they were looking for. In contrast, Rutherford and his followers embraced the electron and proton as the latest indivisible particles. This viewpoint was congruent with traditional thinking. Space was still continuous. Waves and particles were still separate.

Electrons: Quanta?

Niels Bohr, student of both Thomson and Rutherford, introduced quantum theory into the mix. Much of the evidence concerning the electron came from analyzing the spectrum of light that is given off by a hydrogen atom. One of the first conclusions was that a moving electron gives off light. On closer examination it was found that the spectrum of light given off by the electron was bunchy rather than continuous. There were inexplicable gaps in the fabric. Bohr came up with a purely mathematical explanation for this phenomenon by employing Planck's energy quanta. The model suggested that electrons could only be in distinct orbits, but nowhere in-between. Although the model fit the precise data perfectly, the theory behind the model satisfied no one, including Bohr. It implied that space, rather than being continuous, was actually discontinuous, at least beneath the surface of the atom – on the subatomic level. Then came World War I.
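The "bunchy" hydrogen spectrum can be illustrated with the Rydberg formula, which Bohr's model reproduces: a drop between two allowed orbits emits light of one specific wavelength, with nothing in between. This is a minimal sketch; the function name and the rounded value of the constant are choices made for this illustration.

```python
RYDBERG_H = 1.0967758e7  # Rydberg constant for hydrogen in 1/m (rounded value)

def emission_wavelength_nm(n_upper, n_lower):
    """Wavelength in nm of light emitted when the electron drops from orbit
    n_upper to orbit n_lower: 1/lambda = R_H * (1/n_lower^2 - 1/n_upper^2)."""
    if n_upper <= n_lower:
        raise ValueError("n_upper must be greater than n_lower for emission")
    inverse_wavelength = RYDBERG_H * (1 / n_lower**2 - 1 / n_upper**2)
    return 1e9 / inverse_wavelength

# Visible (Balmer) lines: drops into the second orbit
for n in (3, 4, 5, 6):
    print(n, "-> 2:", round(emission_wavelength_nm(n, 2), 1), "nm")
```

Because the orbit numbers are whole numbers, only a discrete set of wavelengths can appear, which matches the gaps seen in the measured spectrum.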

Electrons: Not Particles, but Waves?

After the war, a primary focus of young Physicists was to resolve this heresy against Mechanics in a traditional manner. Everyone, including Bohr, was convinced that electrons were microscopic particles moving through a continuous space and giving off light waves. There must be a novel perspective, as yet hidden, that would resolve the paradox of quantized space. The supposed solution came from a surprising direction. To resolve the inconsistencies with this perspective, Schrödinger derived his famous equation that defined electrons instead as waves. This solution was radical, but still didn't challenge traditional notions of a continuous space and a single truth.

Electrons: Probability Waves?

While his equation fit the increasingly precise data concerning electrons, hypothetical extensions of Schrödinger’s equation led to some strange and impossible results. For instance, when certain conditions were introduced into the equation, atoms expanded to the size of the Pentagon. To resolve this seemingly paradoxical situation, Max Born successfully applied probability theory to the problem. His solution indicated that the motion and position of the electron could be more accurately characterized as a probability wave. Improbable as the solution seemed, Born’s probability insight resolved the mathematical difficulties introduced by Schrödinger’s equation and fit the hard data. But this resolution implies that the world outside the atom is essentially different from the world inside the atom - the one continuous and certain, the other discontinuous and ambiguous – a Quantized and Probabilistic Universe.
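In standard textbook notation, Born's reading treats the squared magnitude of Schrödinger's wave function as a probability density. A compact statement for one spatial dimension follows; the symbols $\psi$, $x$, $a$ and $b$ are the usual textbook ones and are not taken from the discussion above.

```latex
P(a \le x \le b) = \int_a^b |\psi(x)|^2 \, dx,
\qquad
\int_{-\infty}^{\infty} |\psi(x)|^2 \, dx = 1
```

The first expression gives the chance of finding the electron between $a$ and $b$; the second says that the electron must be found somewhere.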

Heisenberg’s Uncertainty Principle: Precise Position or Dynamics, not both

Born’s solution, while it fit the facts, didn’t resolve the question of why some experimental results suggested that the electron was a wave and others suggested that it was a particle. Exploring the mathematical inferences of quantum theory, Heisenberg, Bohr's student, derived his famous Uncertainty Principle. An electron's static position or dynamic movement can be measured precisely, but not both. This suggested that an electron was either a wave or a particle depending upon the mode of observation. A simplistic version of this philosophical earthquake maintains that subjectivity is a factor in observation due to inescapable mathematical constraints.
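The trade-off between position and "dynamic movement" is usually written as an inequality. In the common textbook form below, $\Delta x$ is the uncertainty in position, $\Delta p$ the uncertainty in momentum, and $\hbar$ the reduced Planck constant; these symbols are standard notation rather than part of the text above.

```latex
\Delta x \, \Delta p \ge \frac{\hbar}{2}
```

Shrinking $\Delta x$ forces $\Delta p$ to grow, and vice versa, so the product can never fall below the fixed bound.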

Probability: the Ruler of the Subatomic Universe

The insights of Born and Heisenberg moved Probability to center stage in the quest to understand the essential nature of the Subatomic Universe. Richard Feynman’s insights into Quantum Electrodynamics sealed Probability’s position as Ruler of Subatomic Particles. Feynman’s insight was even more counter-intuitive. His formulas allowed scientists to calculate the behavior of subatomic matter to unbelievable levels of precision. However, the basis for these equations considered that subatomic particles moved in all possible directions simultaneously, including forward and backward in time. His computations revealed which of these directions weren’t canceled out by contrary motion. Scientists now had to take all possible directions into account to make this probabilistic computation.
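The idea that most directions are "canceled out by contrary motion" can be hinted at with a toy calculation. The sketch below is not Feynman's actual computation: it assumes each path contributes one unit arrow whose angle grows with the square of the path's deviation from the straight line, and the names `summed_amplitude` and `stiffness` are hypothetical.

```python
import cmath

def summed_amplitude(deviations, stiffness=50.0):
    """Add one unit arrow exp(i*phase) per path, with phase = stiffness * d**2,
    where d is how far the path strays from the straight-line path (toy model)."""
    return sum(cmath.exp(1j * stiffness * d * d) for d in deviations)

step = 0.001
near = [i * step for i in range(-200, 201)]   # paths close to the straight line
far = [i * step for i in range(600, 1001)]    # paths far from it, on one side
far += [-d for d in far]                      # plus their mirror images

print("near paths:", len(near), "net arrow length:", round(abs(summed_amplitude(near)), 1))
print("far paths: ", len(far), "net arrow length:", round(abs(summed_amplitude(far)), 1))
```

Although there are twice as many far paths, their arrows point in rapidly changing directions and largely cancel, while the arrows of the near paths mostly line up and dominate the total.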

Probability's uncertainty at the heart of Subatomic, hence Physical, Universe

Probability can't make definitive statements about individual moments

Probability's rise to preeminence was not without controversy. If the insights were true, it was now essential to think of the Universe in a probabilistic and paradoxical fashion. Due to Probability's innate nature, subatomic particle/waves weren't predictable on the individual level. Although Probability can make incredibly precise statements regarding large groups of electrons, he cannot predict the behavior of an individual electron or photon. He has a hard time relating to individuals (probably an Aquarius). A single photon, like a single coin, can go heads or tails with each flip of the experimental coin. The single photon's eventual resting place is just as random as flipping a coin. The results concerning a group can be predicted extremely well, while the individual not at all. Probability's precisely defined predictions break down when applied to individual bits of eternal matter.

Subatomics are both Waves and Particles

Further, a direct implication of the Heisenberg Uncertainty Principle is that electrons and photons have no absolute identity. The observer determines whether the subatomic particle is a wave or particle. The mode of perception determines the answer. There is no definitive answer as to the electron’s ultimate nature. It is solely dependent upon the observer. The question determines the answer. There is no absolute truth. These solutions placed uncertainty at the heart of the subatomic, hence the physical world.

Nobel Prize winners stand in opposition to Probability's inherent Uncertainty

To indicate how revolutionary the implications of these scientific findings were, a group of scientists, including Nobel Prize winners Schrödinger & Einstein, immediately came out in opposition – claiming that there was still an absolute truth out there just waiting to be discovered, which would resolve this core uncertainty. Appalled at this turn of events, Einstein made his famous statement, “God doesn’t play dice”, and spent a considerable amount of his prodigious mental capabilities in the unsuccessful attempt to resolve the ambiguity that now lay at the very heart of Physics. In essence, this prestigious group was defending the absolute theoretical and philosophical eminence of Mechanics from this internal revolution by the adherents of Probability. Certainty was under attack and they were employing all the analytic tools at their disposal to prop up the falling empire.

Embracing inherent Paradox & Ambiguity

Bohr's principle of complementarity: Subatomics are both Wave & Particle

Another group of Physicists led by Niels Bohr embraced Probability's inherent uncertainty and even attempted to enshrine paradox and ambiguity as inherent aspects of existence. Bohr had the credentials to lead the revolution, as he initiated the whole mess when he successfully applied quantum theory to the subatomic world. In a 1927 lecture at Como, published in 1928, he presented his principle of complementarity. He postulated that for subatomic theory to be complete, electrons and photons must be viewed as both waves and particles, even though these are mutually exclusive states. This was the paradox.

Subatomic polytheistic perspective challenges notion of monotheistic space-time continuum

On the subatomic level, the perspective of the observer determined the nature of reality, rather than vice versa. This upended the traditional perspective that there was an objective reality out there just waiting to be discovered. Truth was relative to perspective rather than absolute. There were now two truths rather than one. The subjective implications were unacceptable to those who demanded one absolute objective truth. This polytheistic perspective was particularly disturbing to Einstein, as it implied that there was a discontinuous tear in his monotheistic space-time continuum. He had tied the package together with a neat bow, only to find that there was an unexplainable hole in the fabric. He spent the remainder of his career in an unsuccessful attempt to repair the paradoxical gap.

Uncertainty Principle: A Limit to Knowledge rather than Absolute Certainty

Part of the problem in resolving the paradox had to do with the Heisenberg Uncertainty Principle. The mathematical principle stated that knowledge of position and dynamics were inversely related in terms of the electron. In other words the more that one knew about the position of an electron, the less one could know about its dynamics. Further, since Heisenberg had derived his formula, the uncertainty constant had appeared in myriad diverse circumstances. This inner connectivity enhanced the credibility of the theory that there is an absolute limit to our knowledge. This viewpoint totally contradicted the prior scientific mindset that absolute knowledge was attainable. This attitude was entirely believable after Mechanics uncovered the universal principles that govern the atom. As the atom was believed to be the ultimate particle - the building block of matter, this seemed to be the end of knowledge. With the advent of the electron, the facade of absolute certainty was replaced with absolute uncertainty.

Bohr & Von Neumann: Either Dynamics & Causation or Position & Definition

Bohr took the implication of the Uncertainty Principle to a new level of ambiguity. He reasoned that if knowledge of position and dynamics are inversely related, then description and causation are also inversely related. The more precise our description of position, the less we could know about the dynamics. Yet dynamics indicates the relationship between things. As such dynamics is intimately tied with causation and meaning. The more precisely position is described, the less we know about causation - content versus process. Von Neumann, arguably one of the top mathematicians of the 20th century, proved this mathematically.

Due to Subatomic Holes, Continuous Equations demoted from Philosophical Metaphor to Accurate Model

This viewpoint totally contradicted the traditional certainty of Mechanics. His continuous equations combined with the initial conditions could precisely define the exact position and dynamics of any object, including atoms, at any point in time. By challenging the possibility of absolute knowledge with his principle of complementarity, Bohr also challenged the supremacy of the continuous equation as a philosophical metaphor. Rather than reflecting the innate nature of the universal order, these equations were demoted to fancy models. While an accurate reflection of the atomic world, the continuity was filled with holes on the subatomic level. Look closely enough and paradox and ambiguity emerge. It is no wonder that there was such an uproar – the very foundations of our Western ethnocentric arrogance were being challenged.

Mechanics & Probability wedded as Modern Physics

Complementarity Principle confirms traditional relationship between Mechanics & Probability

The uproar, however, died out relatively quickly. For one, Bohr's principle of complementarity only confirmed the traditional relationship between Mechanics and Probability. Mechanics as a system of dynamics had supplied the explanatory power in terms of causation. Probability as a static system had only supplied descriptions. Although Probability was required to plug the gaps, his methods are primarily employed as computational tools, not as an explanation of causation. Probability's main philosophical contribution is the innate ambiguity and paradox at the heart of matter. Although amplified by the findings regarding the subatomic universe, ambiguity is an innate feature of the games of chance that gave rise to the laws of Probability. Further, Gödel proved that any consistent formal system rich enough to express arithmetic is incomplete; in that sense, for such a system to be complete it must contain paradox. Thus by introducing paradox Probability completed the system of Physics as an explanatory tool.

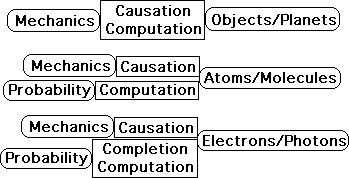

Diagram: Development of relationship between Mechanics & Probability

The following diagram illustrates the development of the relationship between Mechanics and Probability.

Mechanics provides both the explanations of causation and the computational power when objects are large enough to be seen with the eye, such as planets and balls. Mechanics still provides the causal mechanisms when the objects are invisible to the naked eye. This includes both atomic and subatomic particles. Probability is required to provide the computational power for the uncountable numbers of microscopic particles. In the subatomic realm of electrons and photons, Mechanics requires Probability to fill in the gaps in his theory. As such Probability completes the explanatory picture.

Merger of Probability and Mechanics predicts the behavior of pure matter

Despite the inherent philosophical uncertainty that this solution introduced, Physicists could now accurately predict the behavior of pure matter from the level of the electron and proton all the way up to the galaxies and everything in-between. Probability was used to perform the computations for the data sets of eternally identical atomic particles. When the matter became big enough, Mechanics (classical Physics) with his continuous equations took over. Physicists proudly claimed that they could predict the behavior of matter on a continuum from the microscopic to the macroscopic. Probability and Mechanics were now wedded forever as modern Physics. The abilities of both were required to describe the behavior of pure matter. However when pure matter was polluted with life, this merger proved helpless. For instance, Physics has a hard time predicting where a chicken will land when tossed into the air.

Scientific Reductionism and building block reasoning

Intoxicated by the explanatory and predictive powers of this merger of classical Physics and Probability with regard to pure matter, its followers began claiming that they were on the verge of predicting the behavior of everything. A classic logical chain of scientific reductionism goes as follows: “We can predict the behavior of the subatomic world, which provides the ‘building blocks’ of the atomic world, which provides the ‘building blocks’ of the material world, which provides the ‘building blocks’ of the Universe. Therefore we can predict the behavior of the Universe. We just have a few details to work out.”

Field of Action determines the nature of the Explanation, not Reductionism

This line of reasoning is confuted by the notion that the field of action determines precedence, not the building block/fundamental principle mentality. For instance, while Physics informs us about all the incredible details of resonance, it tells us virtually nothing about the music of Bach’s Brandenburg Concertos. In a similar fashion, the laws of Material Science provide Biology with some inescapable constraints. While these laws supply the essential structure that enables the development of the complexity required of living systems, they do not determine meaning, the Music of Life, any more than the laws of resonance reveal anything about the meaning of music. The field of action determines the nature of the explanation. While underlying structure enables essential complexity, it does not determine meaning.

Ambiguity & Paradox swept under rug to maintain illusion of Certainty

All the subtle concepts introduced by subatomic particles, the ambiguity and paradox, were swept under the rug to keep them out of sight. The scientific community didn’t want this uncertainty to taint the triumphant union of Mechanics & Probability. This union is an explanatory and computational tool that could describe the behavior of matter almost completely – the operative word being ‘almost’. The arrogance of certainty emerged almost immediately after the hubbub died down. After all, Physics could confidently claim that he could totally explain and compute everything that really matters - especially if all that matters is matter. Living matter is another story.

Probability paves the way for the Living Algorithm.

Living Algorithm’s Process complements Probability's Content.

How does this discussion apply to the Living Algorithm’s mathematical system of digesting information? Like Mechanics, the Living Algorithm provides a system of dynamics. As a system of dynamics the Living Algorithm provides explanatory power that Probability, as a static system, can never hope to provide. While probabilistic descriptions provide boundaries, they can't possibly reveal underlying meaning. This was one of the insights of Bohr's complementarity principle. It was possible to know either process or content, not both. This is another reason that the two are complementary systems. The Living Algorithm reveals the patterns of the data stream’s process, while Probability reveals the content of the data set.

Probability dethrones Continuity, setting the stage for Living Algorithm’s digital system.

Before Probability was required to plug the subatomic holes, continuous equations reigned supreme both scientifically and philosophically. With Probability's ascent, the importance of continuous equations as a philosophy waned. They became a great model rather than a definitive feature of the Universal Substance. This dethronement of continuity opened the door for the Living Algorithm’s system of dynamics, which is digital.

Photons: an elemental Information Pulse in Living Algorithm System

Although the Living Algorithm system has very little in common with the material world from the atom on up, the Living Algorithm’s patterns have much in common with subatomic wavicles. Schrödinger’s equation transforms the electron from a particle to a wave. Born's mathematical resolution transforms the material wave into a probability wave. Bohr's interpretation implies that probability is information more than material. Hence the electron and photon become information waves, packets or pulses of information. The Living Algorithm’s basic manifestation is as a pulse of information - the Creative Pulse, a.k.a. the Pulse of Attention. In fact, the eyes sense the individual photon as one of the Living Algorithm’s fundamental information pulses.

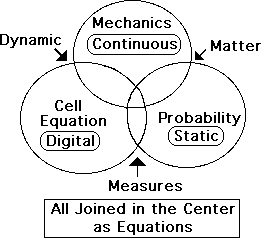

Diagram: Interlocking Triad of Systems: Mechanics, Probability & Living Algorithm

This analysis suggests that the triad of Mechanics, Probability and the Living Algorithm form a comprehensive interlocking system that incorporates both the static and dynamic nature of matter and life. Each mathematical system is necessary to explain and analyze different parts of the puzzle. There would be a conspicuous gap if any of the systems were excluded. The similarities and specialties are shown in the following diagram.

The bowed triangle in the center is where the three sets intersect as equation-based systems. The systems of Mechanics and Probability intersect as studies of the material world. Mechanics and the Living Algorithm intersect as systems of dynamics. Probability and the Living Algorithm intersect as types of measures. Each of the mathematical systems is unique in its own way. The Living Algorithm is digital, the equations of Mechanics are continuous, and Probability is static.

Living Algorithm Speciality: Dynamic Relationships between Moments

The Living Algorithm’s field of action is the ongoing dynamic relationship between the individual data points in a stream. This focus on individual interactions gives rise to some intriguing features that are inaccessible to Probability’s universal and static focus. Let’s enumerate a few.

Data Stream Dynamics

The Living Algorithm focuses upon dynamic interactions. Accordingly, mechanistic concepts, such as force, energy, work, and power, can be applied to data streams. Because Probability's data sets are static, they have none of these dynamic features. Because of her dynamic nature, the Living Algorithm provides meaning and causality, which Probability, due to his innate fixed nature, can't. The next volume in the series, Data Stream Dynamics, provides an in-depth analysis of the Living Algorithm’s dynamic nature.

Links

We’ve made references to the Living Algorithm System’s dynamic nature. This dynamic feature is due to the Living Algorithm’s ability to characterize individual moments in a data stream by relating data points to each other. How does she accomplish this unusual, yet special, feat? To find out the answer, read the next article in the stream – Mathematics of Relationship.

To see why this is one of the Living Algorithm's ordeals, find out why Life yearns for a Mathematics of Relationship.