- Living Systems require New Mathematics of Data Streams

- Data Stream Mathematics must address Life’s Immediacy

- Data Stream Mathematics must include ongoing Predictive Descriptors

- Precision & Relevance Incompatible

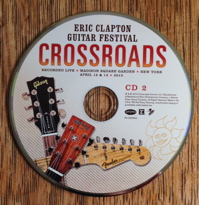

Have you ever considered how we translate the impersonal digital information of 1s and 0s into personal knowledge that is relevant to our existence? For instance, how are we able to derive 'music' from our favorite CD? How is it that we dance wildly or cry uncontrollably when we hear a sequence of 1s and 0s that can't even touch each other? What is the translation process that bridges the infinite chasm between these two simple numbers?

I am excited to present a plausible theory that accounts for our personal connection to the impersonal digital sequences contained on our CDs, DVDs, computers and iPhones. The process that seems to enable this connection is contained in a mathematical system labeled Information Dynamics. The theory concerns how living systems digest digital information to transform it into a form that is meaningful to Life. While the information processing epitomized by a computer is of necessity static, exact and fixed, living information digestion is of necessity dynamic, approximate and transformational. Think of the difference between a baby and a computer.

Our initial monograph illustrated some of the many patterns of correspondence between the mathematical processes of Information Dynamics and empirical reality. These include the harmful effects of Interruptions to the Creative Process, the negative impact of Sleep Deprivation, the Necessity of Sleep, and even the Biology of Sleep.

These striking correspondences evoke some distinct questions. Why does the mathematical model behave in similar fashion to experimentally verified behavioral and biological reality? Could these correspondences be a mere coincidence, some kind of odd artifact? Or perhaps the striking patterns are due to some as yet undiscovered molecular/subatomic mechanism? Or could these odd correlations between mathematical and living processes be due to the process by which living systems digest information?

We chose to explore the last of these possibilities. The first question we asked ourselves was: What kind of information digestion process would a living system require? What are the entry-level requirements?

The following essay addresses three questions. Why do living data streams best characterize the dynamic nature of living systems? Why do data streams require a new mathematics? And what requirements must this data stream mathematics fulfill if it is also to be the mathematics of dynamic living systems?

Living Systems require New Mathematics of Data Streams

Dr. Zadeh calls for New Mathematics to characterize Biological Systems

The highly respected Dr. Lotfi Zadeh is considered to be the father of the well-established fuzzy logic approach to engineering. Dr. Zadeh co-authored the first book on linear systems theory in 1963, which immediately became a standard text in engineering schools. This publication sealed his prestige in the scientific community. Yet he was already moving in a contrary direction. He was grappling with the difference between living systems and material systems. Most of his colleagues, in their attempt to accurately characterize the features of living systems, were pursuing a course that applied the mathematics of inanimate systems to what were becoming known as animate systems. The effort to capture the unique features of animate systems with conventional mathematics resulted in ever-greater levels of complexity and precision. He sensed that this effort to describe 'animate' systems with tools built for 'inanimate' ones was conceptually misguided. He had begun to realize that living systems are qualitatively different from non-living systems, and that this difference cannot be captured by the mathematics of inanimate sets, no matter how complex. He recognized that a new mathematics was required to articulate this qualitative difference. His 1962 paper, “From Circuit Theory to System Theory,” foreshadowed this new perspective.

“There are some who feel this gap [between 'animate' and 'inanimate' systems] reflects the fundamental inadequacy of the conventional mathematics – the mathematics of precisely-defined points, functions, sets, probability measures, etc. – for coping with the analysis of biological systems, and that to deal effectively with such systems, which are generally orders of magnitude more complex than man-made systems, we need a radically different kind of mathematics, the mathematics of fuzzy or cloudy quantities which are not describable in terms of probability distributions.” (Bart Kosko, Fuzzy Thinking, p. 145, 1993, quoting Dr. Zadeh’s paper)

Biological Systems Orders of Magnitude more complex than Inanimate Systems

Dr. Zadeh reminds us that biological systems are orders of magnitude more complex than inanimate systems. This is not to diminish either the complexity of inanimate systems or the explanatory power of conventional mathematics and physics. But when we want to make meaningful statements about biological systems, with all their attendant complexity, what can be said with the traditional standard of precision is limited. For instance, when we think about the creative act of writing, we realize that the invention and expression of complex ideas has a reality that goes far beyond our ability to map the brain's electrical activity.

Example: Complexity of variables associated with writing and publishing staggering

We may be able to identify the electrical patterns of the brain with a high level of precision and still know very little about the nature of the ideas. The nature of ideas would certainly include elements such as invention, expression, relation and evaluation. Any satisfactory explanation of these elements must go beyond mapping electrical patterns; it requires grasping nuanced meanings that come from a nuanced understanding of context. Yet this level of complexity concerns only the individual writer. When we consider the other relevant social variables connected with writing (editors, agents, publishers and the reading public), the complexity of the interactions among these living systems is staggering. To gain insight into how a particular author invents, expresses and interacts with others, we need to know the subtleties at work in that particular case. This example illustrates Zadeh’s notion that biological systems have a level of complexity that far exceeds that of inanimate systems.

"Probability distributions" accurately characterize the fixed data sets of inanimate systems

Dr. Zadeh makes an important distinction between 'animate' and 'inanimate' systems. Fixed data sets typify 'inanimate' systems – systems which do not change with time and whose members are “precisely defined”. The “probability distributions of conventional mathematics” work extremely well when dealing with these fixed data sets. The mathematics of probability processes this data to produce familiar measures, such as mean and standard deviation, that accurately characterize the features of such a set. The analysis of these static and fixed data sets is a perfect way to discuss the general features of a fixed and static population – an 'inanimate' system.
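To make the set view concrete, here is a minimal Python sketch of the conventional measures applied to a fixed data set. The readings are hypothetical, invented for illustration. It computes the mean and population standard deviation, then reverses the order of the members to show that neither measure changes: every member is weighted equally, and no member counts as "most recent".

```python
import statistics

# Hypothetical fixed data set: ten temperature readings (°F) from one day.
fixed_set = [58, 61, 64, 67, 70, 72, 71, 68, 64, 60]

# Conventional set measures: every member carries the same weight, 1/n.
mean = statistics.fmean(fixed_set)  # 65.5
sd = statistics.pstdev(fixed_set)   # population standard deviation

# Reversing the order changes nothing: a fixed set has no "most recent" member.
reversed_mean = statistics.fmean(fixed_set[::-1])
reversed_sd = statistics.pstdev(fixed_set[::-1])

print(f"mean={mean}, sd={sd:.3f}")
print(f"reversed: mean={reversed_mean}, sd={reversed_sd:.3f}")
```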

Fixed data sets inappropriate for the study of ‘animate’ systems

In contrast, when we consider the dynamic nature of biological systems, the precisely defined data set, with its attendant “conventional mathematics” of probability, is inappropriate. The appropriate approach must address the fact that an organism is, by definition, in a continually changing state that is neither fixed nor precisely definable. Living systems move through time and space, constantly monitoring and adjusting in order to enhance the possibility of survival (as well as the achievement of any higher-order goals). It is this aspect of “biological systems” that scientists hope to capture through an analysis of animate systems. The new mathematics of animate systems must somehow articulate this qualitative difference between the static and dynamic features of existence.

Living Data Streams & the New Mathematics

There is another way of looking at data that better reflects the dynamic nature of living systems. This new method requires living data sets, which we choose to call data streams. The mathematics of living data streams must somehow address the information flow of an inherently growing data set. Only in this manner will the new mathematics be able to describe the complex ongoing relationship between organism and environment. Any model of animate systems that hopes to simulate Life's dynamic nature must incorporate this new mathematics of living data streams.

Data Stream Mathematics must address Life’s Immediacy

This new mathematics that addresses the data streams of living systems must go beyond traditional methods of characterizing data. Conventional probability computes a single mean or standard deviation to characterize an entire set of data, where each set member is weighted equally. The new mathematics of living data streams should not weight all members of the data stream equally. The most relevant information regarding the well-being of an organism is generally the most current information. Although past experience certainly has relevance, recent changes in conditions are more likely to have an immediate impact upon the well-being of the organism. For instance, the average temperature for the day might be 65°, but the more relevant information to an organism is that the current temperature is 32°. Making meaning out of living data streams requires a mathematics that weights recent input more heavily.
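A minimal sketch of the idea, assuming one common way of weighting recent input more heavily: an exponentially weighted average, in which each new reading pulls the running value toward itself by a fraction `alpha`. The essay does not specify a weighting scheme; the function names, the `alpha` value and the temperature readings below are hypothetical.

```python
def equal_weight_mean(readings):
    """Conventional mean: every reading counts the same."""
    return sum(readings) / len(readings)

def recency_weighted_mean(readings, alpha=0.5):
    """Exponentially weighted average (an assumed scheme, not the essay's).

    Each new reading moves the average a fraction alpha of the way toward
    itself, so older readings fade by a factor (1 - alpha) per step.
    """
    avg = readings[0]
    for x in readings[1:]:
        avg += alpha * (x - avg)
    return avg

# Hypothetical day: mild readings, then a sharp cold snap at the end (°F).
readings = [70, 72, 75, 74, 70, 60, 45, 32]

print(equal_weight_mean(readings))      # 62.25
print(recency_weighted_mean(readings))  # 43.71875
```

With a late cold snap, the equal-weight mean stays near the warm part of the day, while the recency-weighted value sits much closer to the current 32°. Raising `alpha` makes the average more responsive; lowering it makes the average smoother and slower to react.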

Data Set Mathematics trivializes Data Streams

The nature of mathematical meaning as applied to data streams is going to be qualitatively different from the nature of mathematical meaning for a fixed data set. Traditional probability distributions are an excellent articulation of the general meaning of fixed data sets. But this excellent articulation of overall averages inherently undervalues whatever significance may lie in the pattern of immediately preceding events. Therefore, the power of conventional mathematics breaks down when it is applied to anything other than a fixed set with equivalent members. In fact, the only way that conventional mathematics can address a living data stream is by adding the new data to the existing fixed set, and then treating the new set as an enlarged, yet fixed, set in which all members are equivalent. This traditional approach trivializes the significance of particular moments in a dynamic data stream.
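The dilution of a new moment can be shown with simple arithmetic. In this sketch (the numbers are hypothetical), 1,000 earlier readings of 65° are followed by one new reading of 32°. Folding the new point into the enlarged set gives it a weight of only 1/1001, so the recomputed mean barely moves.

```python
# Hypothetical history: 1,000 earlier readings of 65°F, then one new reading of 32°F.
history = [65.0] * 1000
new_reading = 32.0

# Conventional approach: fold the new point into the set and recompute the mean.
enlarged = history + [new_reading]
mean_before = sum(history) / len(history)
mean_after = sum(enlarged) / len(enlarged)

# The new point's weight is only 1/len(enlarged), so its effect is diluted.
shift = mean_before - mean_after
print(f"before={mean_before}, after={mean_after:.3f}, shift={shift:.3f}")
```

The larger the accumulated set, the smaller the shift, which is exactly the sense in which the enlarged-set approach trivializes the newest moment.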

Data Stream Mathematics must weight most recent input more heavily

Probability theory inherently ignores the immediacy of events. Probability is a big-picture specialist. It characterizes the nature of the entire set, and therefore undervalues the significance of the most recent environmental input. In contrast, living systems typically find it pragmatic to weight recent experience more heavily (see above), rather than taking all the members of the set equally into account when making computations. In short, the “probability distributions of conventional mathematics” are appropriate for dealing with fixed and permanent data sets (where every member is weighted equally). However, traditional data set mathematics is inappropriate for dealing with dynamic, ongoing data streams (where members are weighted according to their proximity to the most recent data point). A brand-new type of mathematics is therefore needed, one that specializes in living data streams. This data stream mathematics must somehow take life’s immediacy into account by weighting the most recent points more heavily.
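The two weighting philosophies can be compared side by side. This sketch assumes geometric decay as the recency scheme: a point k steps back from the newest receives raw weight `(1 - alpha) ** k` before normalization. The essay itself does not commit to this or any other specific scheme, and `alpha = 0.3` is an arbitrary illustrative choice; both function names are hypothetical.

```python
def equal_weights(n):
    """Data-set view: each of the n members gets the same weight, 1/n."""
    return [1.0 / n] * n

def recency_weights(n, alpha=0.3):
    """Data-stream view under one assumed scheme (geometric decay).

    A point k steps back from the newest gets raw weight (1 - alpha) ** k.
    Weights are normalized to sum to 1; index 0 is the oldest point and
    index n - 1 the most recent.
    """
    raw = [(1 - alpha) ** (n - 1 - i) for i in range(n)]
    total = sum(raw)
    return [w / total for w in raw]

flat = equal_weights(5)
decaying = recency_weights(5)
print("equal:  ", [round(w, 3) for w in flat])
print("recency:", [round(w, 3) for w in decaying])
```

Both weight lists sum to 1, but the equal weights are flat while the recency weights rise steadily toward the most recent point.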

Data Stream Mathematics must include ongoing Predictive Descriptors

New Mathematics needs new measures that describe the moment

A mathematics that addresses immediacy will inevitably produce new measures that focus our attention on a particular moment, or series of moments, in the data stream. Because this mathematics applies to data streams, not data sets, these new measures must be qualitatively different from traditional measures. To address the importance of recent events, these measures must describe the nature of the most current moments. They sacrifice big-picture averages in order to describe the momentum of the moment more accurately. Probability, by contrast, sacrifices the uniqueness of a particular moment by folding each individual data point into an overall average of the entire data set. Traditional measures, such as the Standard Deviation and the Mean Average, are therefore inadequate descriptors of a living data stream. Rather than measures that describe the entire population, Data Stream Mathematics requires descriptive measures that focus upon the immediacy of the moment.
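As a sketch of what "descriptors of the moment" might look like, the function below computes a recency-weighted mean and standard deviation incrementally, one point at a time, using the standard exponentially weighted update for mean and variance. That choice of update, the `alpha` value, the function name and the sample stream are assumptions for illustration, not a method the essay defines.

```python
import math
import statistics

def moment_descriptors(stream, alpha=0.25):
    """Recency-weighted mean and standard deviation of a data stream.

    Assumed scheme (exponential weighting): each new point moves the
    estimates by a fraction alpha, so a point's influence fades by a
    factor of (1 - alpha) with every later point.
    """
    mean, var = float(stream[0]), 0.0
    for x in stream[1:]:
        diff = x - mean
        incr = alpha * diff
        mean += incr
        var = (1 - alpha) * (var + diff * incr)
    return mean, math.sqrt(var)

# Hypothetical stream: a long calm stretch, then a few volatile recent moments.
stream = [10] * 50 + [14, 6, 15, 5]

recent_mean, recent_sd = moment_descriptors(stream)
overall_sd = statistics.pstdev(stream)  # whole-set measure, all points equal
print(f"recent sd={recent_sd:.2f}, whole-set sd={overall_sd:.2f}")
```

On this stream the whole-set standard deviation spreads the final burst of volatility across all 54 points, while the recency-weighted deviation reflects what the stream is doing now.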

Descriptive measures must also be predictive

To be useful to the organism, these descriptive measures must include a predictive component. Probability's descriptive measures (the mean average and the Standard Deviation) allow scientists to make well-defined predictions regarding general populations. For instance, probability theory can predict the collective behavior of billions of subatomic particles with an amazingly high degree of precision. Similarly, the data stream descriptors must allow us to make meaningful predictions about the next point in the stream. Accurately describing the most recent moments in the data stream enables the organism to make probable statements about the future, and these probable statements provide timely information. Without information about the nature of particular moments in time, the organism would be flying blind as it confronted the environmental data stream. In essence, these probable statements serve two functions: 1) to predict environmental behavior with greater accuracy, and 2) to use this information in determining a more appropriate response. Any data stream mathematics that hopes to simulate living systems must include descriptors of particular moments in time that also allow us to make useful predictions about the next point in the stream.

Predictive Statements about Data Streams likely to be ‘Fuzzier’ or Suggestive

The predictive statements derived from these desired data stream measures are, however, likely to look ‘fuzzier or cloudier’ than the predictive statements derived from traditional data set measures. The meaning is likely to be 'fuzzy or cloudy' in that the predictions will be suggestive rather than definitive. Practical considerations limit the predictive accuracy of the data stream's ongoing measures. The predictive statements of Probability mathematics are going to be definitive because they are applied to fixed data sets, which, by definition, never change. The predictive statements of data stream mathematics are likely to be fuzzier or suggestive because they are applied to a living data stream, which, by definition, possesses the capacity for constant change.
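One way to express a suggestive rather than definitive prediction is to forecast the next point as a range instead of a single value. This sketch assumes a sliding window: only the `window` most recent points count (equally), and the likely range is their mean plus or minus `k` standard deviations. The function name, window size, `k` and sample streams are arbitrary choices for illustration.

```python
import statistics

def suggestive_forecast(stream, window=5, k=2.0):
    """Forecast the next point as a (low, center, high) range.

    Assumed scheme (illustrative only): the `window` most recent points count
    equally and older points count zero; the range is their mean plus or
    minus k population standard deviations.
    """
    recent = stream[-window:]
    center = statistics.fmean(recent)
    spread = k * statistics.pstdev(recent)
    return center - spread, center, center + spread

# Hypothetical streams: one calm, one turbulent.
calm = [20, 21, 20, 19, 20, 21, 20]
turbulent = [20, 26, 14, 27, 12, 25, 15]

calm_low, calm_center, calm_high = suggestive_forecast(calm)
turb_low, turb_center, turb_high = suggestive_forecast(turbulent)
print(f"calm:      {calm_low:.1f} to {calm_high:.1f}")
print(f"turbulent: {turb_low:.1f} to {turb_high:.1f}")
```

The calm stream yields a narrow range around 20, while the turbulent stream yields a wide one: the forecast states only as much as the recent moments support.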

New Data Stream Math trades probabilistic rigor for relevance of the moment

The new data stream mathematics will not satisfy the traditional predictive rigor demanded by Probability. Yet, this new mathematics will complement traditional approaches by providing more powerful predictors about the immediate behavior of the data stream. The predictive power provided by these data stream measures may well be more significant than overall statements about a growing (yet fixed) data set. A mathematics that weights the immediacy of moment(s) can be a more useful predictor than traditional Probability mathematics (where all members of a fixed set are weighted equally). This new mathematical meaning may not fulfill the criteria for predictive precision demanded by the mathematics of probability; but what it lacks in predictive precision, it more than makes up for by focusing upon the relevance of more recent events.
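The claim that a recency-weighted predictor can be more useful than an equal-weight one can be checked on a toy example. This sketch makes one-step-ahead predictions on a hypothetical stream whose level shifts partway through, and compares the mean absolute error of the running equal-weight mean with that of an exponentially weighted average (an assumed scheme, with an arbitrary `alpha`; the function name is hypothetical).

```python
def compare_one_step_errors(stream, alpha=0.3):
    """Mean absolute one-step-ahead error of two predictors of the next point.

    Predictor 1: equal-weight mean of every point seen so far (data-set view).
    Predictor 2: exponentially weighted average (one assumed recency scheme).
    """
    total = stream[0]  # running sum for the equal-weight mean
    ewma = stream[0]   # running recency-weighted average
    err_equal = err_recent = 0.0
    for t in range(1, len(stream)):
        x = stream[t]
        # Predict x using only stream[:t], then score both predictions.
        err_equal += abs(x - total / t)
        err_recent += abs(x - ewma)
        # Update both summaries with the newly observed point.
        total += x
        ewma += alpha * (x - ewma)
    n = len(stream) - 1
    return err_equal / n, err_recent / n

# Hypothetical stream whose level shifts from 50 to 80 partway through.
stream = [50.0] * 30 + [80.0] * 10
mae_equal, mae_recent = compare_one_step_errors(stream)
print(f"equal-weight MAE:     {mae_equal:.2f}")
print(f"recency-weighted MAE: {mae_recent:.2f}")
```

On this stream the recency-weighted predictor recovers from the shift within a few steps, while the equal-weight mean lags for the rest of the stream. The advantage depends on the stream actually changing: on a stream that fluctuates randomly around a fixed level, the equal-weight mean would typically be the steadier predictor.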

Data Stream Predictors have great relevance for Living Systems

Are these data stream predictors relevant to living systems?

Clearly there are times when an organism benefits from a heightened awareness of the immediate environment. Living systems are often required to make instant responses to ongoing environmental input, which is in essence a collection of data streams. The changing conditions inherent in these data streams often require an urgent response, and that potential urgency demands a flexibility of interpretation and response that is sensitive to the momentum of the moment, or series of moments. The organism’s ability to predict the probable momentum of an ongoing series of experiences is therefore particularly relevant. Probability’s preoccupation with the general features of a fixed data set fails to capture the momentum of recent events. Any Data Stream Mathematics that hopes to simulate this feature of living systems must somehow provide predictive descriptors that characterize the probable momentum of the moment. As we shall see, this characterization should also reveal the emerging pattern(s) in a series of moments in the life of an organism.

Suggestive Predictions: Relevance?

This analysis of two complementary mathematical systems raises some significant questions. What level of precision is reasonable to expect from the predictive measures of data stream mathematics? Does the distinction between data sets and data streams suggest the possibility of two different standards of precision when analyzing data? Can we tolerate two different standards of precision when analyzing data? If the level of predictive accuracy for data stream mathematics is viewed as substandard by conventional mathematics, can it still be useful? Is accuracy that is suggestive, yet not definitive, a useful predictor? For some preliminary answers to these questions, read on.

Precision & Relevance Incompatible

Conventional mathematics may frown skeptically when confronted with the suggestion that not all significant patterns in Life can be characterized by the precise standards of Probability. However, this presumed imprecision of data stream mathematics turns out to be an asset, not a liability.

Zadeh's Principle of Incompatibility

In terms of the organism’s predictive powers, precision and meaning are inversely related: the more precision, the less meaning, and vice versa. This notion may seem counterintuitive, yet in his study of system theory the aforementioned Dr. Zadeh turns this concept into a principle, the principle of incompatibility:

“[Zadeh] saw that as the system got more complex, precise statements had less meaning. He later called this the principle of incompatibility: Precision up, relevance down.” (Kosko, Fuzzy Thinking, p. 145, 1993)

In 1972, Zadeh articulated the principle even more clearly. Kosko quotes Zadeh:

“As the complexity of a system increases, our ability to make precise and significant statements about its behavior diminishes until a threshold is reached beyond which precision and significance (or relevance) become almost mutually exclusive characteristics. … A corollary principle may be stated succinctly as, ‘The closer one looks at a real-world problem, the fuzzier becomes the solution.’” (Fuzzy Thinking, p. 148, 1993)

If it is true, as Dr. Zadeh argues, that real-world problems require fuzzy solutions, data stream mathematics may provide a method to explore this fuzziness.

Life's Immediate Meaning Incompatible with Probability's Precision

The concept behind Zadeh's Principle of Incompatibility helps explain why the traditional laws of Probability find Life's Immediacy perplexing. Probability is far more comfortable dealing with what is familiar – his specialty. He feels most at ease with fixed, unchanging data sets where all members are functionally equivalent. Perhaps his desire for the comfortable, yet rigid, precision of conventional mathematics presents an insurmountable challenge to understanding the complexity of Life's immediate meaning. For Life to have her spontaneous immediacy appreciated, she may have to search elsewhere for a mathematical partner.

Perhaps understanding the immediacy of the moment requires a partner that relates better to a data stream. While this tradeoff sacrifices the comfortable predictability of the more traditional relationship, it offers her a freshness that comes from more accurately understanding the meaning of the moment(s) – her most subtle nature. As we shall see, the suggestive predictors of Data Stream Mathematics with their relative imprecision are an ideal match for characterizing her meaning of the moment – Life's Immediacy.

Summary, Questions & Links

The esteemed Dr. Zadeh makes the claim that ‘conventional mathematics’ with its ‘precisely defined probability measures’ is inadequate for ‘coping with the analysis of biological systems’. He further states that a new ‘mathematics of fuzzy or cloudy quantities’ is required ‘to deal effectively with such systems’. We suggest that a productive step in this direction is the study of living data streams, rather than fixed data sets. Living data streams reflect life’s ongoing and immediate nature. A mathematics of living data streams may uniquely capture these characteristic aspects of biological systems.

Questions

This new approach, however, has strict requirements. Is it reasonable to expect that a mathematics of data streams will satisfy all of the criteria discussed above? Is it reasonable to expect this method to be: 1) current, 2) self-reflective of immediately preceding experience, 3) responsive to pattern, 4) sensitive to any change in context, and 5) pragmatically predictive? What metaphor, other than mathematics, is capable of systematically relating all these variables of living systems? What will this new mathematical metaphor look like? And if a mathematical metaphor can effectively relate these criteria, could this metaphor actually be a mechanism that living systems employ to process data streams? Is mathematics the information processing language of living systems?

Links

For some suggested answers to these questions, read the next article in this series: Living Algorithm System.

Click on this link to discover a little about the Author's decades-long relationship with data streams.